Unmasking AI Agents: Who Is Really Running the Show?

AI agents are exploding in popularity, evolving from research projects into serious business tools. But are we really thinking about the security implications? Security experts at OWASP are, and they're pointing out that "Non-Human Identities" (NHIs) are critical to how secure your AI is. Their research shows that these autonomous software entities can make decisions, chain actions, and run 24/7 without a human babysitter. They're not just helpers anymore; they're becoming a permanent part of the digital workforce, and that demands a fresh look at security.

Think about it: AI can now analyze customer data, whip up reports, manage your systems, and even deploy code, all autonomously. That's huge, but it also opens the door to some serious risks.

AI Agents: Only as Safe as Their Credentials

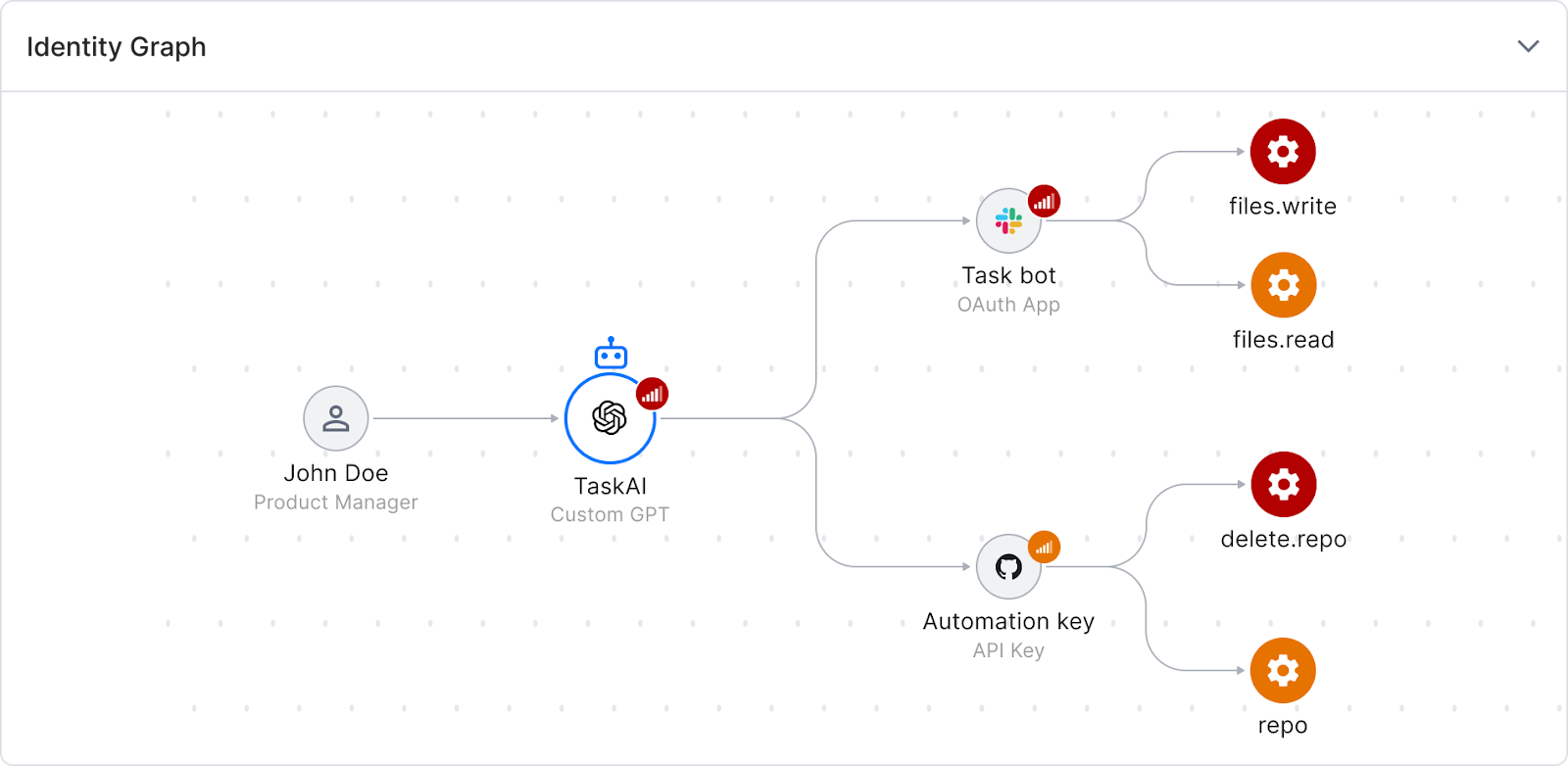

Here's the thing many security folks are missing: AI agents don't live in a vacuum. They need to access data, systems, and resources to do their jobs. And that access? It's often granted through NHIs – think API keys, service accounts, OAuth tokens, and other machine credentials. These are the keys to the kingdom!

These NHIs are like the connective tissue linking your AI to your critical systems. They dictate what your AI workforce can and can't do.

The key takeaway? While AI security is a broad topic, keeping your AI agents secure boils down to securing the NHIs they use. Control their access, monitor their permissions, and you've got a much better handle on the risks.
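What does "control their access, monitor their permissions" look like in practice? Here's a minimal Python sketch, assuming you already have an inventory of NHIs and a record of which permission scopes each one actually uses (for example, pulled from access logs). The `NonHumanIdentity` model, the scope names, and the agent names are all hypothetical; this isn't any vendor's API. It simply flags permissions that were granted but never used, which are prime candidates for revocation under least privilege.

```python
from dataclasses import dataclass, field


@dataclass
class NonHumanIdentity:
    """A machine credential used by an AI agent (hypothetical model)."""
    credential_id: str
    kind: str    # e.g. "api_key", "service_account", "oauth_token"
    agent: str   # the AI agent that uses this credential
    granted_scopes: set[str] = field(default_factory=set)
    used_scopes: set[str] = field(default_factory=set)  # observed in access logs


def unused_permissions(nhis: list[NonHumanIdentity]) -> dict[str, set[str]]:
    """Return, per credential, the scopes that were granted but never used."""
    report = {}
    for nhi in nhis:
        unused = nhi.granted_scopes - nhi.used_scopes
        if unused:
            report[nhi.credential_id] = unused
    return report


inventory = [
    NonHumanIdentity("key-reporting", "api_key", "report-bot",
                     granted_scopes={"crm:read", "crm:write", "billing:read"},
                     used_scopes={"crm:read"}),
    NonHumanIdentity("sa-deployer", "service_account", "deploy-agent",
                     granted_scopes={"ci:deploy"},
                     used_scopes={"ci:deploy"}),
]

# Print each over-privileged credential with its unused scopes.
for credential, scopes in unused_permissions(inventory).items():
    print(credential, sorted(scopes))
```

Unused scopes are a signal, not proof: some permissions are needed only rarely, so treat the report as a review list for whoever owns the credential.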

AI Agents: A Force Multiplier for NHI Risks

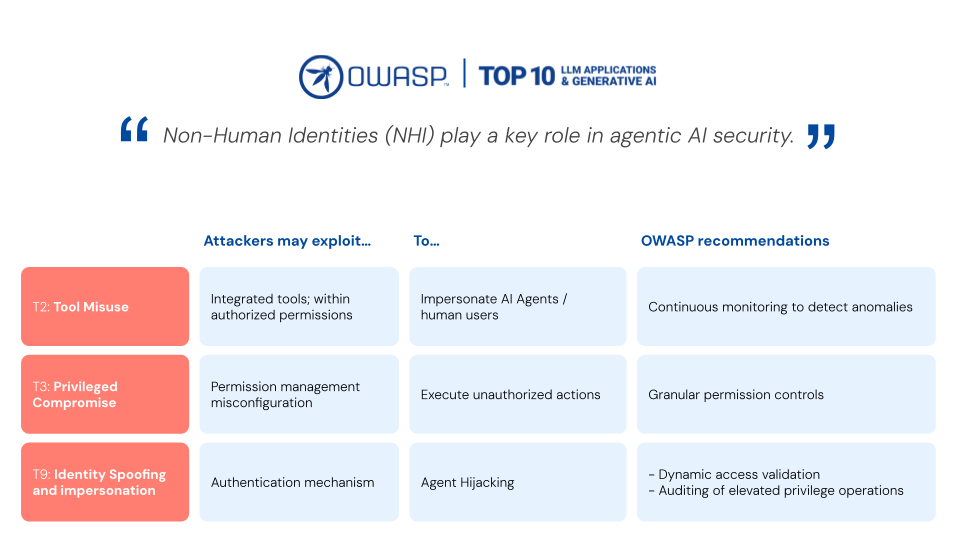

AI agents amplify existing NHI security problems in ways that traditional security just isn't ready for:

- Speed and Scale: They can execute thousands of actions in seconds.

- Chaining Tools: They link multiple tools and permissions in unpredictable ways.

- Continuous Operation: They run constantly, blurring session boundaries.

- Broad Access: They need wide-ranging system access to be effective.

- New Attack Vectors: Multi-agent setups create fresh vulnerabilities.

This all means increased complexity and potential for serious security holes:

- Shadow AI: Employees might deploy unregistered AI agents using existing API keys, creating hidden backdoors.

- Identity Spoofing: Attackers could hijack an AI agent's permissions and gain broad access across systems.

- AI Misuse: Compromised agents could trigger unauthorized workflows or steal data while looking like normal activity.

- Cross-System Exploitation: AI with access to multiple systems dramatically increases the impact of a breach.
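Shadow AI and identity spoofing both come down to a credential being used by something you never approved. As a rough illustration, this sketch compares access-log events against a registry of which agents are allowed to use each credential. The `AccessEvent` fields, the `REGISTERED_USERS` registry, and every name in it are hypothetical assumptions. Real logs may not reliably identify the calling client, and an attacker can fake such identifiers, so treat this as a starting point rather than a detection system.

```python
from collections import defaultdict
from typing import NamedTuple


class AccessEvent(NamedTuple):
    """One entry from a (hypothetical) access log."""
    credential_id: str
    client: str  # agent or workload name the log attributes the call to


# Hypothetical registry: the agents approved to use each credential.
REGISTERED_USERS = {
    "key-reporting": {"report-bot"},
    "sa-deployer": {"deploy-agent"},
}


def find_unregistered_use(events):
    """Map each credential to the clients that used it without being registered.

    Credentials missing from the registry entirely are flagged for every client.
    """
    findings = defaultdict(set)
    for event in events:
        allowed = REGISTERED_USERS.get(event.credential_id, set())
        if event.client not in allowed:
            findings[event.credential_id].add(event.client)
    return dict(findings)


log = [
    AccessEvent("key-reporting", "report-bot"),
    AccessEvent("key-reporting", "personal-gpt-script"),
    AccessEvent("key-unknown", "notebook-agent"),
]

for credential, clients in find_unregistered_use(log).items():
    print(credential, sorted(clients))
```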

Securing AI with Astrix

Astrix helps you get a grip on your AI security by giving you complete control over the NHIs powering your AI. Instead of flying blind, you get visibility into your AI agents and the credentials they use, spot vulnerabilities, and stop threats before they cause damage.

By tying every AI agent to a human owner and monitoring for anomalous behavior, Astrix helps you scale AI confidently.
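The two ideas in that sentence, a human owner for every agent and monitoring for unusual behavior, can be sketched in a few lines. This is a hypothetical illustration, not how Astrix implements it: the `Agent` model, the `ACTIVE_EMPLOYEES` set (standing in for an export from your identity provider), and the three-standard-deviation threshold are all assumptions. It flags agents whose owner is missing or no longer active, and hourly activity far above an agent's own baseline.

```python
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    name: str
    owner: Optional[str]       # accountable human; None means nobody owns it
    hourly_actions: list[int]  # historical actions per hour, used as the baseline


# Hypothetical stand-in for an export from your identity provider.
ACTIVE_EMPLOYEES = {"alice", "bob"}


def orphaned(agents):
    """Names of agents with no owner, or whose owner is no longer active."""
    return [a.name for a in agents if a.owner not in ACTIVE_EMPLOYEES]


def is_anomalous(agent, current_count, k=3.0):
    """True if this hour's count exceeds the baseline mean by more than k stdevs."""
    mean = statistics.mean(agent.hourly_actions)
    # Floor the stdev at 1.0 so a perfectly flat baseline doesn't flag tiny changes.
    stdev = max(statistics.pstdev(agent.hourly_actions), 1.0)
    return current_count > mean + k * stdev


agents = [
    Agent("report-bot", "alice", [40, 55, 48, 52, 45]),
    Agent("legacy-sync", "carol", [10, 12, 11]),
    Agent("notebook-agent", None, [3, 4, 2]),
]

print(orphaned(agents))               # agents that need a new owner
print(is_anomalous(agents[0], 5000))  # a burst far above report-bot's baseline
```

A fixed threshold like this is crude; agents with bursty workloads would need a longer baseline window or a per-agent `k`.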

The result? Less risk, better compliance, and the freedom to innovate with AI without sacrificing security.

Stay Ahead of the Game

As AI adoption takes off, those who prioritize NHI security will reap the rewards while avoiding the headaches. In the AI era, your security depends on how well you manage the digital identities connecting your AI workforce to your key assets.

Want to dive deeper into Astrix and NHI security? Head over to astrix.security.